Sequoia US: GenAI is a 10x productivity revolution

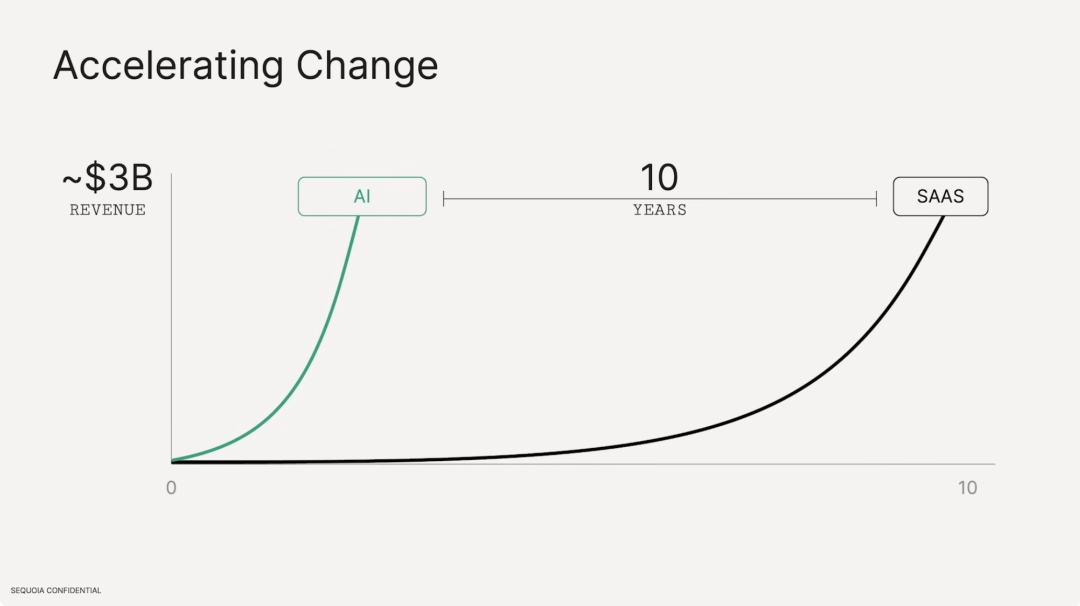

GenAI generated approximately $3 billion in revenue last year, a figure that took SaaS ten years to achieve.

Translated by Lavida

In September 2022, Sequoia Capital US's research report titled "Generative AI: A Creative New World" sparked the first wave of discussions around Generative AI. The subsequent launch of ChatGPT and GPT-4 further accelerated development in the GenAI field. At Sequoia’s AI Ascent conference, several partners offered a systematic retrospective on GenAI’s progress over the past year and a half—progress that has been far more rapid than most anticipated.

Unlike previous waves of AI, GenAI has already achieved remarkable results over the past year: it generated approximately $3 billion in total revenue within just one year of emergence—excluding indirect revenues earned by tech giants and cloud providers through AI. In contrast, the SaaS industry took nearly 10 years to reach this level. On the ground, GenAI is already creating tangible value in industries such as customer service, law, and content creation.

Although the application layer hasn't exploded as optimistically predicted a year ago, Sequoia partners point out that with increasingly intelligent foundation models like Sora and Claude-3 recently rolling out, product-market fit (PMF) cycles for AI products will undoubtedly accelerate. Moreover, it takes time for new technologies to mature, and revolutionary applications similarly require time to emerge. During the mobile internet era, iconic apps like Instagram and DoorDash only appeared years after the iPhone and App Store were launched.

Below is the article outline—recommended for targeted reading.

01 Why Now: From Cloud Computing to AI

02 Present: AI Is Everywhere

03 Future: Everything Is Generated

Over the past year, the market has gone through a complete AI Hype Cycle: from excessive hype during the bubble phase to disappointment and skepticism in the trough of disillusionment. Now, the market is climbing back toward the Plateau of Productivity. It’s becoming clear that LLMs and AI deliver real impact through three core capabilities—creation, reasoning, and interaction—and these are being integrated into applications across domains.

The three core capabilities of AI: Creation, Reasoning, and Interaction

AI now possesses both creative and reasoning abilities. For example, GenAI can generate text, images, audio, and video, while chatbots can answer questions or act as agents to plan multi-step tasks—capabilities no prior software could achieve. This means software can now handle both right-brain creative tasks and left-brain logical processing simultaneously—marking the first time software can interact with humans in a human-like way, with profound implications for business models.

Why Now: From Cloud Computing to AI

Sequoia partner Pat Grady addressed the question “Why is AI booming now?” by reviewing the evolution of the cloud industry over the past two decades.

Pat argues that cloud computing represented a major technological shift: it disrupted the old tech landscape and gave rise to new business models, applications, and modes of human-computer interaction. In 2010, when the cloud was still nascent, the global software market was worth about $350 billion, with cloud software accounting for only $6 billion. By last year, the total software market had grown to $650 billion, with cloud software generating $400 billion in revenue. By Pat's account, this translates to a CAGR of roughly 40% over 15 years, an extraordinary growth trajectory.
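As a rough check on that growth figure, the compound annual growth rate implied by the two cloud revenue numbers can be computed directly. The sketch below takes the $6 billion and $400 billion figures quoted above as given. The helper name `cagr` and the two time spans are illustrative assumptions: the text gives 2010 as the starting year but cites a 15-year window, so both a 15-year span and a 13-year span (2010 to 2023, if "last year" is read as 2023) are shown.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Cloud software revenue in billions of USD, as quoted in the article.
CLOUD_START = 6.0
CLOUD_END = 400.0

# Two candidate spans (assumptions, see the note above).
for span in (15, 13):
    rate = cagr(CLOUD_START, CLOUD_END, span)
    print(f"{span} years: {rate:.1%} per year")
```

With these inputs the 15-year span comes out a little above 32% per year and the 13-year span a little above 38%, so the quoted 40% is best read as a rounded figure.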

The rise of cloud provides a strong analogy for AI. Cloud replaced traditional software because it enabled more human-like interactions; similarly, today’s AI technology has reached new heights in creativity, logical reasoning, and human-machine interaction. One major future opportunity for AI lies in replacing services with software. If this transformation materializes, AI’s market potential won’t be measured in hundreds of billions—but tens of trillions of dollars. We may well be standing at the most valuable and transformative moment in history.

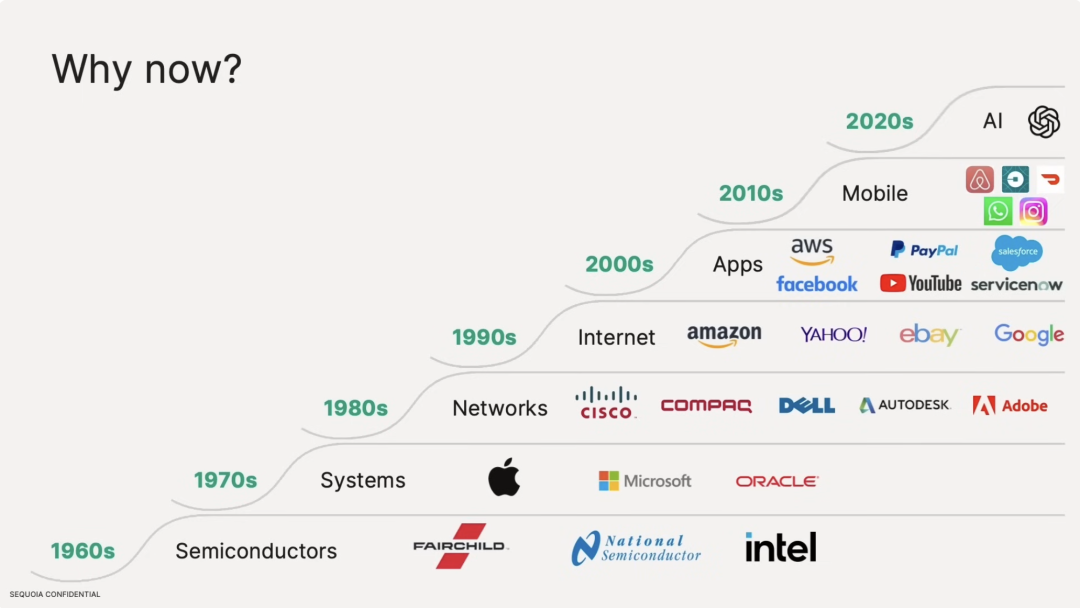

Key technological shifts since the 1960s and their representative companies

As to why now is such a pivotal moment for AI, Pat Grady notes that since its founding, Sequoia has witnessed multiple technological revolutions and benefited from them. Over time, the firm has developed a deep understanding of how different tech waves build upon each other to drive progress:

- 1960s: Sequoia founder Don Valentine led marketing at Fairchild Semiconductor, whose silicon transistors are the origin of the name “Silicon Valley”;
- 1970s: Computers were built atop semiconductor chips;
- 1980s: Networking connected PCs, giving birth to the software industry;
- 1990s: The internet emerged, transforming communication and consumption;
- 2000s: The internet matured, enabling complex applications, and cloud computing arose;
- 2010s: The proliferation of mobile devices ushered in the mobile internet era, reshaping work once again.

Each technological wave builds on the last. Although AI was conceptualized as early as the 1940s, it only became practical, commercialized, and capable of solving real-world problems in recent years—thanks to key enablers such as:

- Abundant and affordable compute power;
- Fast, reliable, and efficient networks;
- Global smartphone adoption;
- Digital acceleration driven by the pandemic.

Together, these generated vast amounts of data for AI training.

Pat Grady believes that AI will define the next 10–20 years. Sequoia holds this conviction strongly—even if it remains to be proven.

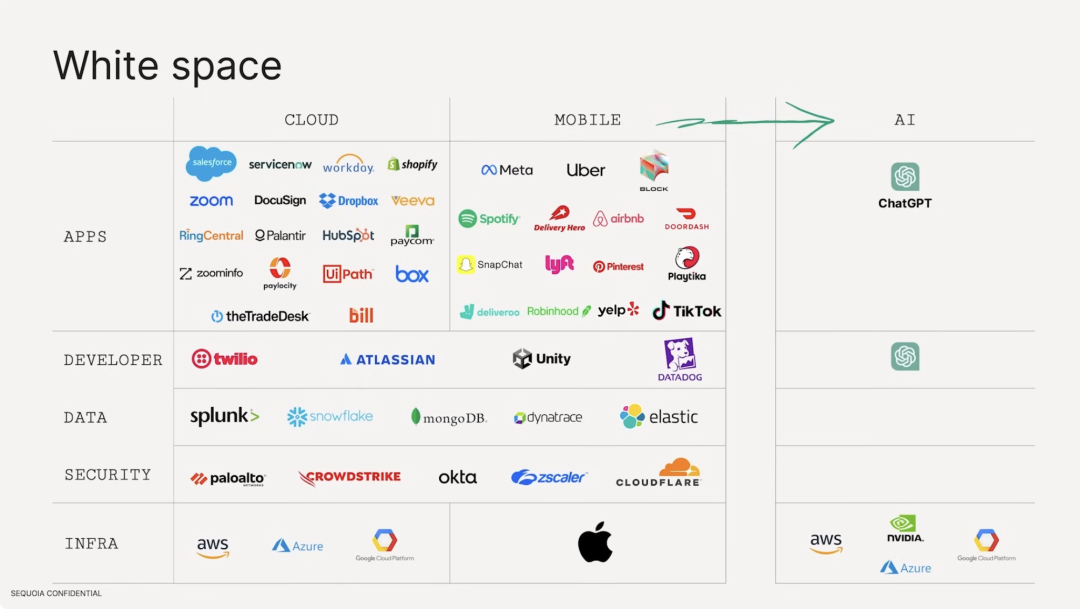

From Cloud and Mobile to AI-era companies

Regarding the future competitive landscape of AI, Pat Grady first reviewed companies from the Cloud and Mobile eras that achieved over $1 billion in revenue (shown on the left side of the chart above). While the far-right column representing AI is still largely blank, it symbolizes immense latent value and opportunity. Pat predicts that within the next 10–15 years, this empty space will be filled with 40–50 new company logos—the very source of excitement for investors.

Present: AI Is Everywhere

Sequoia partner Sonya Huang began by reviewing AI advancements over the past year across customer service, legal, programming, and video generation.

AI applications across industries

2023 was a landmark year in AI history. A year and a half after ChatGPT’s debut, the industry continues to evolve rapidly. Where people once speculated about how AI might transform various fields and boost productivity, AI has now become central to mainstream discourse.

Klarna CEO Sebastian Siemiatkowski’s tweet on X

In customer service, Klarna’s CEO Sebastian publicly shared that the company now uses OpenAI to handle two-thirds of customer inquiries, effectively replacing the work of 700 full-time agents. With tens of millions of call center agents globally, this demonstrates, according to Sonya, that AI has already achieved PMF in customer support.

Legal services, once considered among the most risk-averse and reluctant to adopt technology, now sees startups like Harvey automating everything from routine paperwork to advanced legal analysis.

In programming, we’ve moved rapidly from using AI to write code to having fully autonomous AI software engineers. In video generation, companies like HeyGen use AI to generate avatars that can participate in Zoom meetings.

Avatar generated by Sequoia’s Pat Grady using HeyGen during a Zoom meeting

Tenfold Growth of GenAI

Revenue growth comparison between AI and SaaS

Estimates suggest that GenAI generated around $3 billion in total revenue within its first year—excluding income from tech giants and cloud providers via AI. By comparison, SaaS took nearly 10 years to reach this milestone. This unprecedented speed reinforces confidence that GenAI is here to stay.

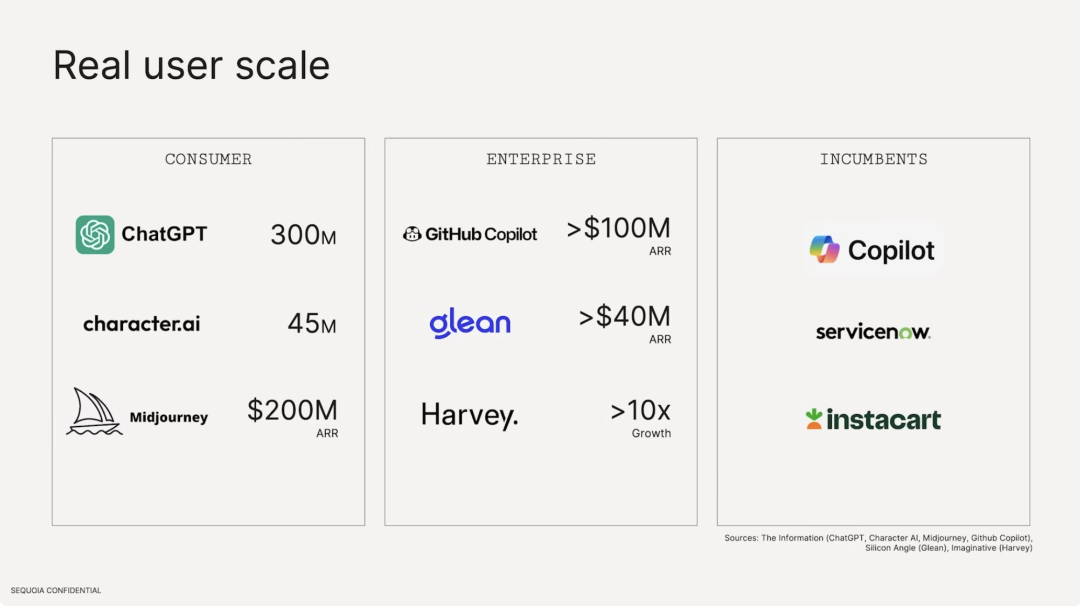

Actual user scale of major GenAI products

The chart shows demand for AI spans far beyond one or two applications—it’s widespread. While many know ChatGPT’s user count, examining usage and revenue data across numerous AI tools reveals that whether B2B or B2C, startups or established players, many AI products have already found solid PMF across diverse sectors.

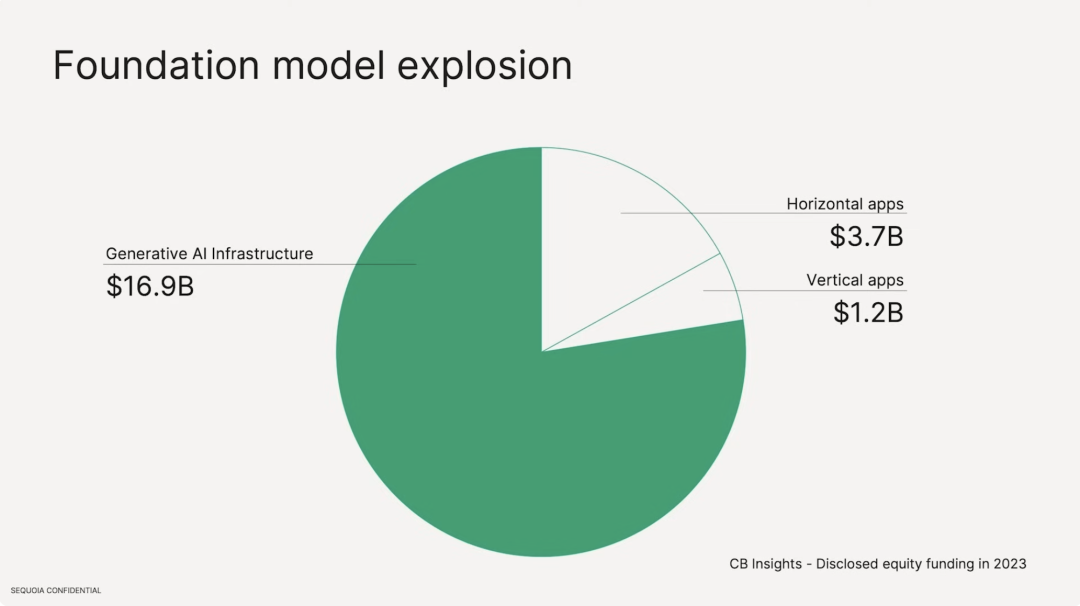

Funding distribution between foundation models and application layers

From an investment perspective, funding imbalance is evident. If GenAI were a cake, the base would be foundation models, the middle layer developer tools and infra, and the top layer applications. A year ago, expectations were that advances in foundation models would spark an explosion of new app companies. The reality has been the opposite: more foundation model startups have emerged and raised significant capital, while the application layer appears only to be getting started.

AI’s $200 Billion Question

Sequoia US partner David Cahn previously published a piece titled “AI’s $200 Billion Question.” Looking at investments in GPUs alone, about $50 billion was spent last year just on Nvidia chips, yet confirmed AI industry revenue stands at only $3 billion. These figures show that the AI sector remains at an extremely early stage, with low ROI and many unresolved challenges.

MAU, DAU, and month-over-month retention rates for AI products vs. mobile apps

Despite impressive user numbers and revenue, AI products still lag significantly behind mobile apps in DAU, MAU, and month-over-month retention. Many users report a gap between expectations and actual experience. Some demos look flashy but underdeliver in practice, leading to short-term engagement.

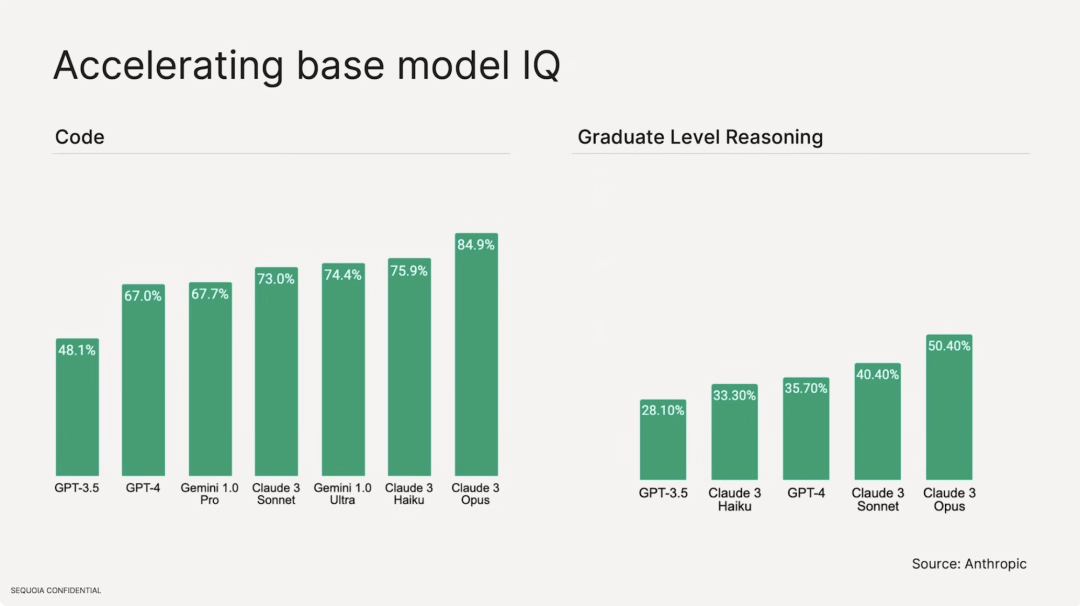

Improvements in foundation model capabilities

These are real issues—but also opportunities. Massive corporate investments in GPUs over the past year have yielded smarter foundation models. Recent releases like Sora, Claude-3, and Grok demonstrate rising baseline intelligence levels in AI, suggesting PMF for future AI products will accelerate.

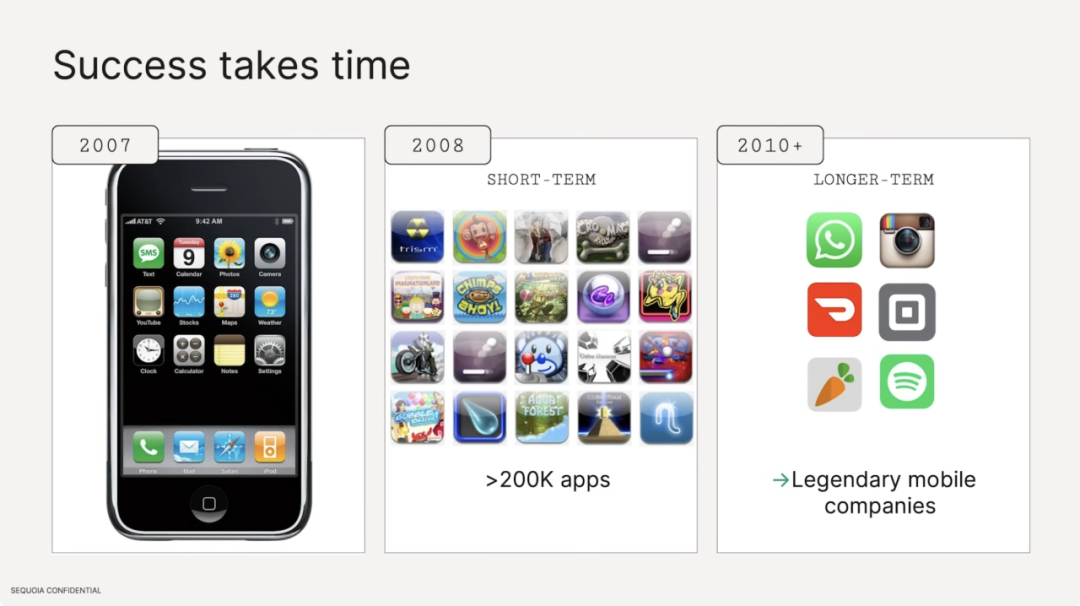

Evolution of the iPhone and App Store

New technologies take time to mature, and breakthrough applications take time to emerge. In the early days of the iPhone, many initial App Store apps were primitive—mere demonstrations of new tech without real utility. Flashlight apps or simple beer-drinking games eventually became system features or trivial novelties. Truly impactful apps like Instagram and DoorDash only emerged years after the iPhone and App Store launched.

AI is undergoing a similar evolution. Many current AI applications remain in demo or early exploration phases—akin to those early App Store apps—but the next generation of legendary companies may already be emerging.

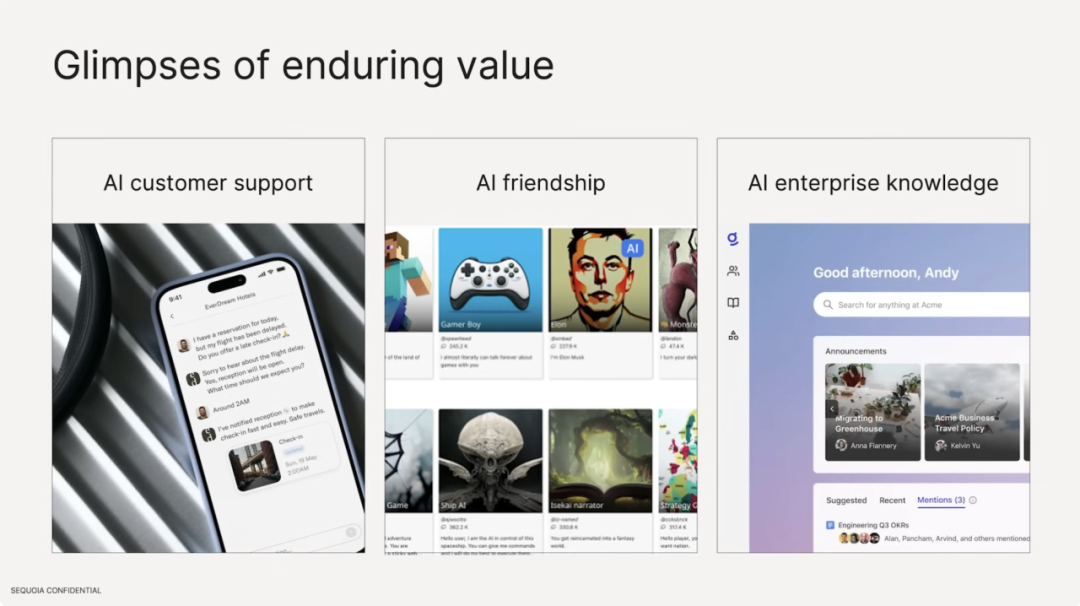

AI use cases are now highly diverse, with three particularly notable areas: AI customer support, AI Friendship (AI virtual companionship), and enterprise knowledge management. Customer support is one of the first enterprise functions where AI has clearly achieved PMF. Klarna is not an exception but part of a broader trend. AI friendship is one of the most surprising success stories, with strong user engagement metrics indicating genuine affection. Additionally, horizontal enterprise knowledge sharing across departments and roles holds substantial promise.

Future: Everything Is Generated

Four Predictions for AI in 2024

Based on the above analysis, Sequoia partners offer four predictions for AI in 2024.

Prediction One: Copilots will evolve into AI Agents.

In 2024, AI will transition from being a human assistant (copilot) to a true agent capable of independently performing certain human tasks. AI will begin to feel more like a colleague than a tool—a shift already visible in software engineering and customer service.

Prediction Two: Models will gain stronger planning and reasoning capabilities.

Critics argue that LLMs merely replicate statistical patterns from their training data rather than engaging in true reasoning. New research directions aim to address this: techniques such as spending more compute at inference time and gameplay-style value iteration give models “thinking time” before they commit to a decision. These advances are expected to enable AI to perform higher-level cognitive tasks like planning and reasoning over the coming year.

Gameplay-style value iteration is a concept borrowed from reinforcement learning, where models evaluate the long-term value of different actions and plan accordingly—similar to strategic thinking in chess or video games.
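To make the idea concrete, here is a minimal sketch of classic value iteration on a toy problem. It is not how any particular LLM implements planning; the five-state corridor, the reward of 1 for reaching the goal, the discount factor, and all function names are assumptions chosen for illustration. The agent repeatedly updates each state's value to the best "immediate reward plus discounted future value" over its actions, then acts greedily on the result.

```python
# Toy value iteration on a 5-state corridor (all numbers are illustrative assumptions).
N_STATES = 5        # states 0..4; state 4 is the terminal goal
GAMMA = 0.9         # discount factor for future rewards
ACTIONS = (-1, +1)  # move one step left or one step right


def step(state, action):
    """Deterministic transition: returns (next_state, reward)."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    reward = 1.0 if next_state == N_STATES - 1 else 0.0
    return next_state, reward


def action_value(state, action, values):
    """Immediate reward plus discounted value of the resulting state."""
    next_state, reward = step(state, action)
    return reward + GAMMA * values[next_state]


def value_iteration(tol=1e-9):
    """Sweep over non-terminal states until the values stop changing."""
    values = [0.0] * N_STATES  # the terminal state keeps value 0
    while True:
        delta = 0.0
        for s in range(N_STATES - 1):
            best = max(action_value(s, a, values) for a in ACTIONS)
            delta = max(delta, abs(best - values[s]))
            values[s] = best
        if delta < tol:
            return values


def greedy_policy(values):
    """For each non-terminal state, pick the action with the highest value."""
    return [max(ACTIONS, key=lambda a: action_value(s, a, values))
            for s in range(N_STATES - 1)]


values = value_iteration()
policy = greedy_policy(values)
print(values)
print(policy)
```

In this toy setting the values rise as the agent gets closer to the goal, and the greedy policy moves right from every state: the long-term value of each action, not just its immediate reward, determines the plan.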

Prediction Three: LLM accuracy will improve, expanding from consumer entertainment to enterprise applications.

In consumer applications, users are generally tolerant of AI errors, since these products are often used for entertainment. But in high-stakes enterprise settings like healthcare or defense, accuracy and reliability are critical. Researchers are developing tools such as RLHF, prompt chaining, and vector databases to help LLMs approach “five nines” (99.999%) reliability and accuracy.
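For a sense of how demanding “five nines” is, the arithmetic can be sketched as follows. The function names and the one-million-request volume are hypothetical, and a 365-day year is assumed; the 99.999% target is the only figure taken from the text.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes, ignoring leap years


def allowed_downtime_minutes(availability: float) -> float:
    """Minutes per year a service may be unavailable at a given availability."""
    return (1.0 - availability) * MINUTES_PER_YEAR


def expected_errors(accuracy: float, n_requests: int) -> float:
    """Expected number of wrong answers among n_requests at a given accuracy."""
    return (1.0 - accuracy) * n_requests


for label, level in (("two nines", 0.99), ("three nines", 0.999), ("five nines", 0.99999)):
    downtime = allowed_downtime_minutes(level)
    errors = expected_errors(level, 1_000_000)  # hypothetical request volume
    print(f"{label}: {downtime:.2f} min/year of downtime, "
          f"about {errors:.0f} errors per million requests")
```

At five nines, the budget is roughly five minutes of downtime per year, or about ten errors per million requests, which is why enterprise use cases set a much higher bar than consumer entertainment.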

Prediction Four: Many AI prototypes and experimental projects will enter production.

2024 is expected to see a surge of AI prototypes and experiments transitioning into real-world deployment. Unlike lab environments, real-world usage demands attention to latency, cost, model ownership, and data governance. This signals a shift in computational focus—from pre-training to inference. Thus, 2024 will be a crucial year requiring careful execution to ensure successful scaling.

The Long-Term Impact of AI

Judgment 1: AI is a massive cost-driven productivity revolution.

There are many types of technological revolutions—the telephone brought a communications revolution, trains transformed transportation, and agricultural mechanization drove a productivity revolution. AI clearly represents a productivity revolution.

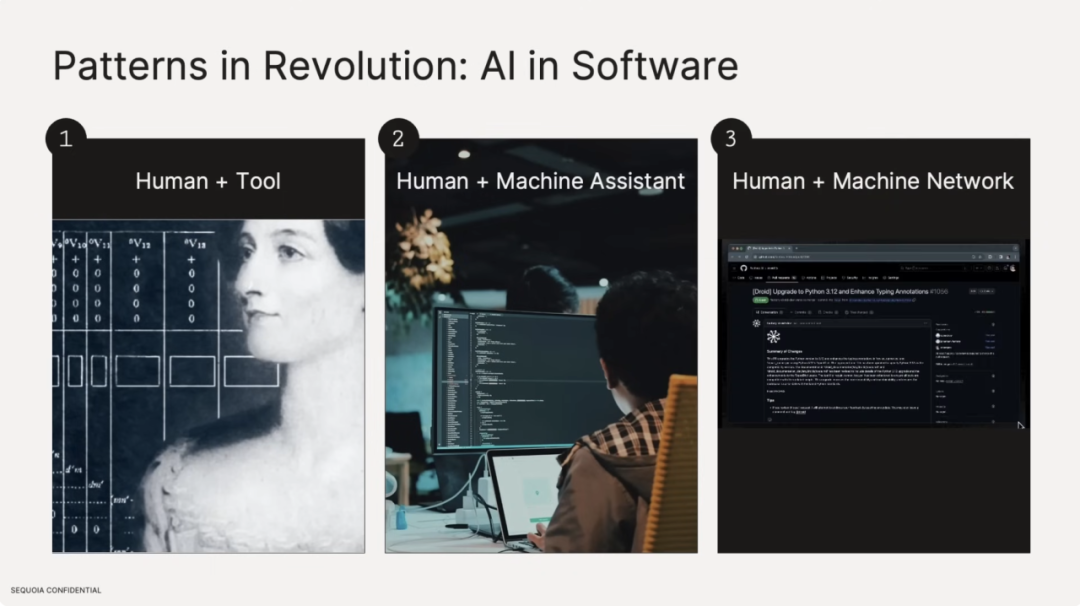

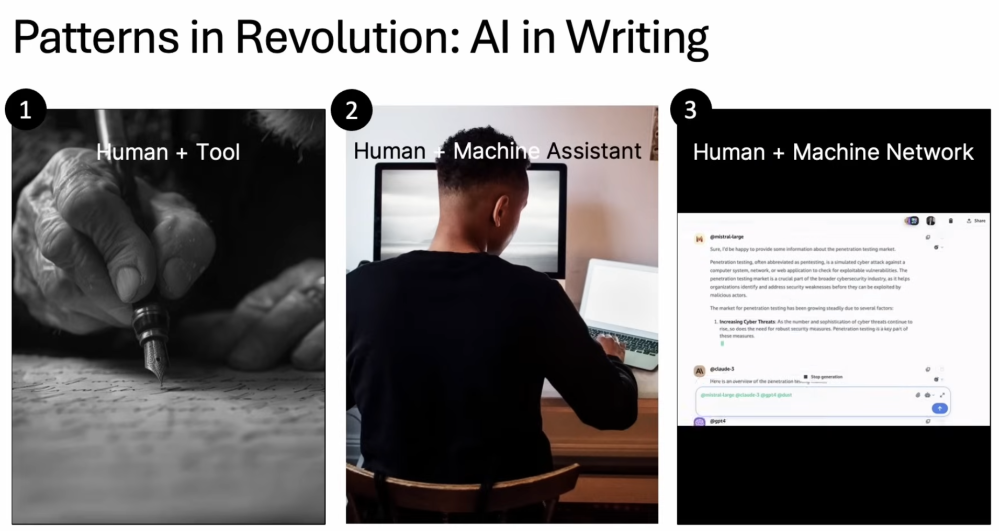

Historical productivity revolutions follow a common pattern: first, humans use tools; then, humans work alongside machines; finally, humans collaborate with networked, coordinated systems. This suggests AI will evolve from isolated tools to highly integrated networks—fundamentally transforming how we work and produce.

Evolution from sickles to combine harvesters

In agriculture, humans used hand sickles for over 10,000 years. Then came mechanical reapers in 1831, and today we have complex networked combine harvesters composed of tens of thousands of interconnected machines—each acting as an agent.

A similar progression exists in knowledge work and writing. Early tools included pen and paper, later evolving into programming, and now computers and IDEs assist at scale. Software development will no longer be a solitary act but a collaborative process involving networked machines—multiple agents jointly generating code.

Writing was once purely manual, then evolved into human-machine collaboration, and now involves multiple coordinated tools. Today’s AI assistants aren’t limited to GPT-4—they include Mistral-Large, Claude-3, and others, often cross-verified to produce better answers.

AI drives down costs across industries

The societal impact of a productivity revolution is broad and deep. Economically, it means significant cost reductions. The chart above shows that the number of employees required per $1 million in revenue among S&P 500 companies is rapidly declining. This means we can accomplish more work faster and with fewer people—not that there’s less to do, but that we can do more within the same timeframe.

Technological progress historically leads to deflation. Due to continuous innovation, software prices keep falling. Yet in society’s most vital sectors—education, healthcare, housing—prices have risen far faster than inflation. AI offers a powerful lever to reduce costs in these critical areas.

Thus, the first key insight into AI’s long-term impact is: AI will drive a massive cost-led productivity revolution, enabling us to achieve more with fewer resources in essential societal domains.

Judgment 2: Everything Will Be Generated

The second judgment focuses on what AI can ultimately do.

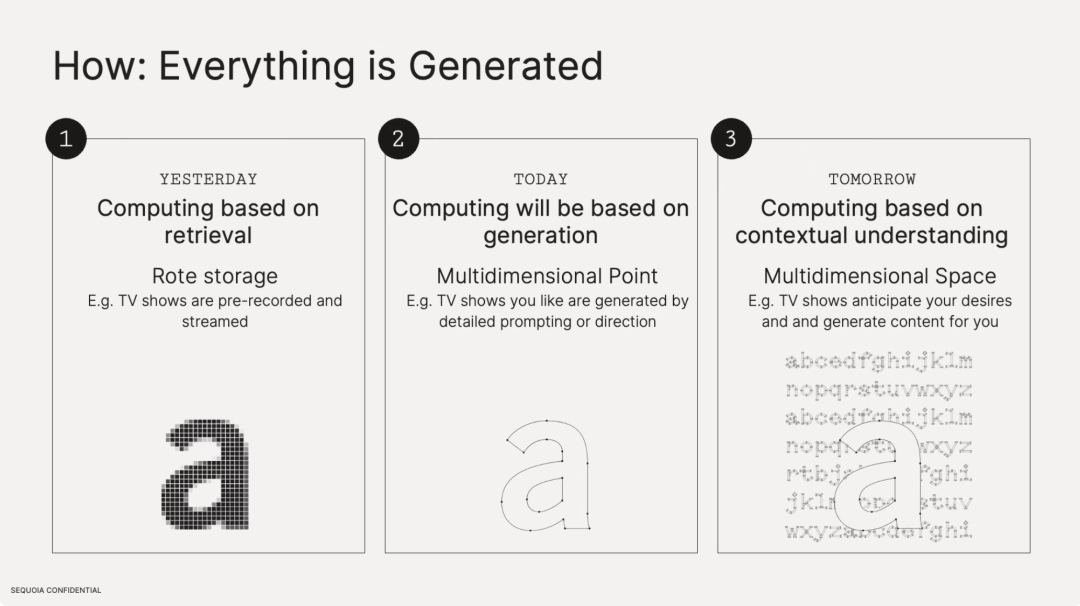

A year ago, Jensen Huang made a bold prediction: in the future, images will not be rendered; they will be generated. This signifies a shift from storing information as pixel matrices to representing it as multidimensional concepts. Take the letter “a”: traditionally it is stored as ASCII code 97 and drawn as a grid of pixels, but computers can now go beyond that to understand the conceptual meaning of “a” as an English letter in context.

More powerfully, computers can not only interpret these multidimensional representations and render them visually but also contextualize them—understanding “a” not as an isolated symbol but as part of linguistic meaning. For instance, seeing the word “multidimensional,” a computer doesn’t fixate on the letter “a” but grasps the full semantic context.

This mirrors core aspects of human cognition. When we learn the letter “a,” we don’t memorize a pixel grid—we grasp an abstract concept. This idea traces back 2,500 years to Plato’s theory of forms, which posits that behind all physical things lies an eternal realm of perfect ideals—echoing how AI learns through abstraction.

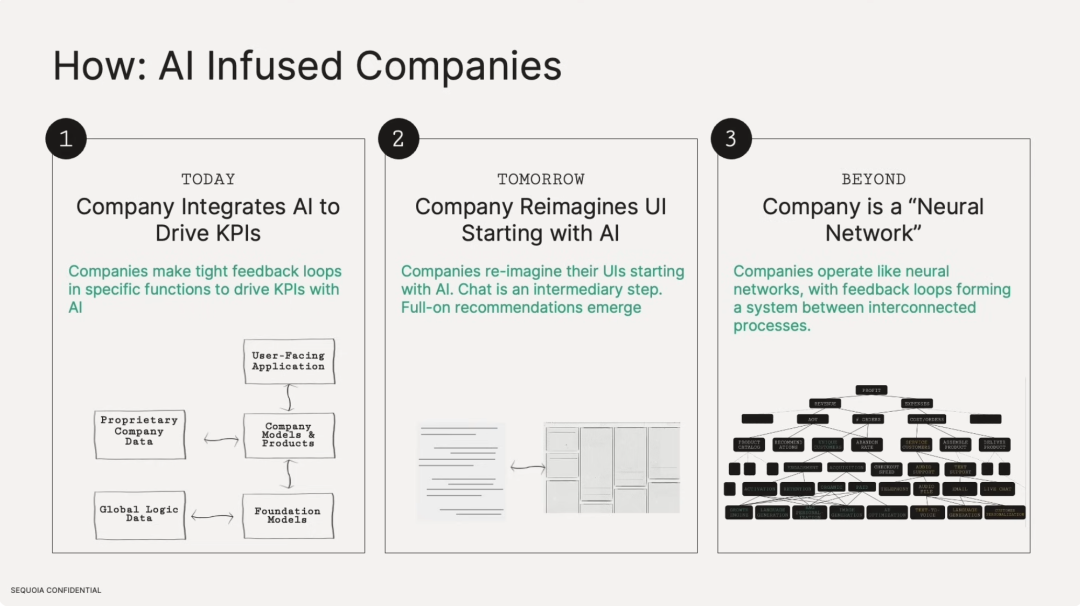

This shift has profound implications for enterprises. Companies are already integrating AI into specific workflows and KPIs—for example, Klarna improving customer support performance via AI-powered information retrieval, enhancing user experience. This transformation brings new user interfaces, potentially unlike any we’ve seen before in customer interactions.

This trend is significant because it suggests organizations may eventually operate like neural networks—interconnected, self-optimizing systems that learn, adapt, and continuously improve efficiency.

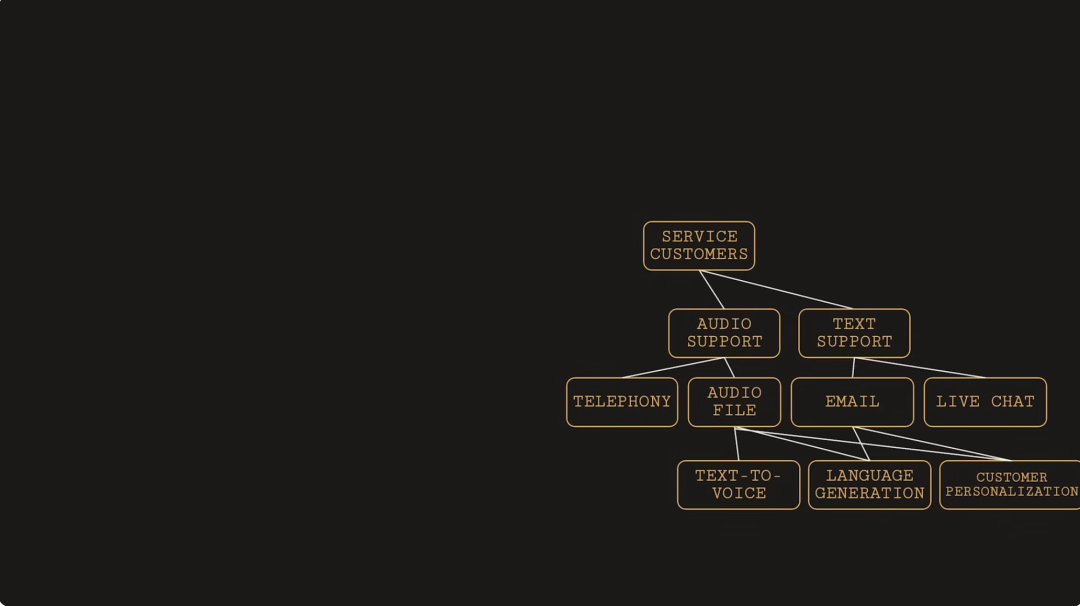

Take customer support: the diagram above illustrates a simplified workflow. Support teams have KPIs influenced by factors like text-to-speech, language generation, and personalization—forming subtrees in an optimization tree. Language generation feedback directly impacts final KPIs. Using such abstractions, entire support processes can be managed, optimized, and refined by neural networks.

Consider customer acquisition. Through AI-driven language generation, growth engines, and ad customization and optimization, businesses can better meet individual customer needs. Interactions among these technologies enable companies to function like neural networks—self-learning and adaptive. Individuals will accomplish more, fueling the rise of solo entrepreneurs and one-person companies.
