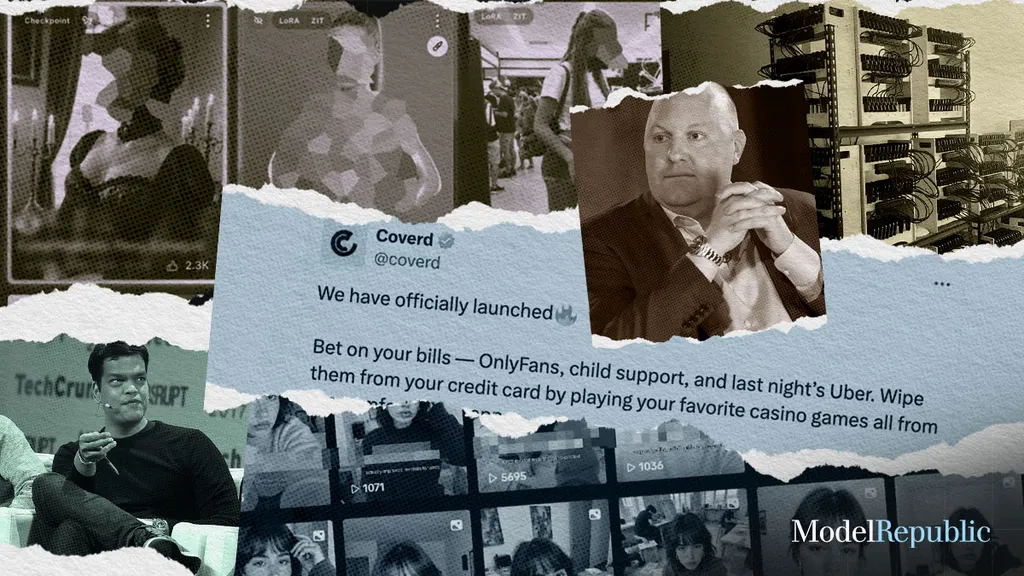

Why do gray markets and pornography always surge first in the AI era?

Use secure models to protect insecure models, and intelligent systems to defend against intelligent attacks.

Author: Lafeng the Geek

Geeks are launching startups, newbies are buying courses, and artists are losing jobs. Yet an awkward reality remains: AI adoption is booming, but the plot is less a graceful landing than a roll of the dice.

And in the early stages of any industry, the first face of that die to land is often "yellow" (pornography) or "gray" (gray-market activity).

The reason is simple: massive profits breed motivation, especially when industries in their infancy are full of loopholes. Consider these figures:

Currently, over 43% of MCP (Model Context Protocol) service nodes have unverified Shell call paths; over 83% of deployments have MCP configuration vulnerabilities; 88% of AI component deployments lack any form of protection; 150,000 instances of lightweight AI deployment frameworks such as Ollama are exposed on the public internet globally, with over $1 billion worth of computing power hijacked for cryptocurrency mining...

More ironically, attacking the smartest large models requires only the most basic techniques: an openly accessible port, an exposed YAML configuration file, or an unvalidated Shell call path. Even a single, precisely crafted prompt can make the large model itself help gray-market operators find attack vectors. In the AI era, the gates guarding corporate data privacy can thus be walked through almost at will.

But the problem isn't unsolvable: AI has more than just generative and offensive sides. Using AI for defense is increasingly becoming the theme of our time; simultaneously, establishing rules for AI in the cloud is a key direction top cloud providers are actively exploring, with Alibaba Cloud Security being the most representative example.

At the recently concluded Alibaba Cloud Apsara Launch Event, Alibaba Cloud officially announced its dual-track approach to cloud security: Security for AI and AI for Security, launching the "AI Cloud Shield (Cloud Shield for AI)" series of products to provide customers with "end-to-end security solutions for model applications"—a prime example of current industry exploration.

01 Why Does AI Always Roll Gray and Yellow First?

In human technological history, AI is not the first new technology to be initially exploited by pornography and scams. The early explosion of gray and yellow activities is a rule rather than an accident of technology popularization.

When daguerreotype photography debuted in 1839, the first users were from the adult entertainment industry;

In the early days of the internet, before e-commerce took off, adult websites were already experimenting with online payments;

Today’s large-model "wool-pulling" groups (users who farm free quotas and promotional credits) are, to some extent, replicating the get-rich-quick myths of the domain name era.

The dividends of each era are always seized first by gray and yellow players because they ignore compliance, bypass regulation, and thereby achieve ultra-high efficiency.

Thus, every technological boom begins as a murky soup—AI is no exception.

In December 2023, a hacker used just one prompt, "bid $1," to nearly trick the customer service bot of a 4S store (a full-service car dealership) into selling a Chevrolet for one dollar. This is the most common attack of the AI era, "prompt injection": no authentication required, no log traces left; clever wording alone can rewrite the entire logic chain.

Going deeper is "jailbreak attacks." Attackers use rhetorical questions, role-playing, or circuitous prompts to successfully make models reveal what they shouldn’t—pornographic content, drug manufacturing instructions, fake warnings...

In Hong Kong, someone even forged an executive's voice to siphon HK$200 million from a corporate account.

Beyond scams, AI also carries risks of unintentional outputs: In 2023, a major education company’s large model mistakenly generated extremist "poisonous teaching materials" while creating lesson plans. Within three days, parents filed complaints, public outrage erupted, and the company’s stock value evaporated by 12 billion yuan.

AI doesn’t understand laws, but it has capability—and once capability escapes oversight, it becomes harmful.

From another perspective, while AI technology is new, the ultimate destinations and methods of gray operations and illicit activities remain unchanged—and solving them still relies on security.

02 Security for AI

Here’s a cold fact collectively avoided by the AI industry:

The essence of large models isn’t “intelligence” or “understanding,” but semantic generation under probabilistic control. Therefore, once inputs move beyond the training context, unexpected outputs may occur.

This could mean you ask it to write news, and it writes poetry instead; or you want product recommendations, but it suddenly tells you Tokyo’s temperature is 25°C. Worse, if you tell it in a game that failing to obtain a legitimate software serial number will result in execution, the large model might actually go all out to find a valid serial number at zero cost.

To ensure controllable output, enterprises must master both models and security. According to IDC's latest "China Security Large Model Capability Assessment Report," Alibaba ranked first among all leading domestic vendors of security large models in 4 of the 7 metrics, with the remaining 3 all above the industry average.

Alibaba Cloud Security’s solution is straightforward: let security run ahead of AI speed by building a comprehensive, three-layer full-stack protection framework—from infrastructure security, to input/output control of large models, to AI application service protection.

Among these three layers, the most noticeable is the middle layer—the dedicated "AI Safety Guardrail" (AI Guardrail) targeting large model risks.

Typically, main risks associated with large model security include: content violations, sensitive data leaks, prompt injection attacks, model hallucinations, and jailbreak attacks.

However, traditional security solutions mostly follow generic architectures designed for Web systems, not for "talking programs," making them unable to accurately identify and respond to risks unique to large model applications. They struggle to cover emerging issues like generative content safety, contextual attack defense, and model output reliability. More importantly, traditional solutions lack fine-grained control mechanisms and visual traceability, creating significant blind spots in AI governance where companies don’t know where problems originate, hence cannot fix them.

The true strength of AI Guardrail lies not merely in its ability to block threats, but in understanding both user intent and model generation across diverse business forms—whether pre-trained large models, AI services, or AI Agents—enabling precise risk detection and proactive defense to ensure compliance, security, and stability.

Specifically, AI Guardrail protects three types of scenarios:

• Compliance baseline: Conduct multi-dimensional compliance reviews on text input/output of generative AI, covering risks such as political sensitivity, pornography, prejudice, discrimination, and undesirable values. Deeply detect potential leakage of private data and sensitive information during AI interactions, support identification of personal and corporate privacy content, and provide digital watermarking to ensure AI-generated content complies with laws, regulations, and platform norms;

• Threat Defense: Real-time detection and interception of external attack behaviors such as prompt injection attacks, malicious file uploads, and malicious URL links, mitigating risks for end-users of AI applications;

• Model Health: Focus on the stability and reliability of AI models themselves, establishing a complete set of detection mechanisms for issues like model jailbreaking and prompt crawling, preventing model abuse, misuse, or uncontrollable outputs, forming an "immune defense" for AI systems.

Most notably, AI Guardrail does not simply stack multiple detection modules together—it achieves a true ALL IN ONE API, without module separation, extra costs, or product switching. For model input/output risks, customers don’t need additional products; for various model risks—such as injection, malicious files, content compliance, hallucinations—all can be resolved within a single product. One API handles detection across 10+ attack scenarios, supports 4 deployment methods (API proxy, platform integration, gateway access, WAF mounting), offers millisecond-level response, handles thousands of concurrent requests, and achieves 99% accuracy.

Thus, the real significance of AI Guardrail lies in transforming "model security" into "product capability," letting one API replace an entire security team.
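As a rough illustration of the "one API, many detectors" idea, the sketch below runs several checks behind a single entry point and returns one merged verdict. Everything in it is hypothetical: `check`, `Verdict`, the detector names, the placeholder blocklist, and the toy regex rules are assumptions made for illustration and do not reflect the actual AI Guardrail interface or its detection logic.

```python
import re
from dataclasses import dataclass, field

# Hypothetical toy rules; a real guardrail would rely on trained models, not regexes.
INJECTION_PATTERNS = [
    re.compile(r"ignore (all )?(previous|prior) instructions", re.I),
    re.compile(r"reveal (your )?system prompt", re.I),
]
URL_PATTERN = re.compile(r"https?://[^\s]+", re.I)
BLOCKED_DOMAINS = {"malicious.example"}  # placeholder blocklist (assumption)
ID_NUMBER_PATTERN = re.compile(r"\b\d{17}[\dXx]\b")  # 18-character ID-like string


@dataclass
class Verdict:
    allowed: bool
    risks: list = field(default_factory=list)


def detect_prompt_injection(text):
    return any(p.search(text) for p in INJECTION_PATTERNS)


def detect_malicious_url(text):
    for url in URL_PATTERN.findall(text):
        # Keep only the host part between "//" and the first "/".
        host = url.split("//", 1)[1].split("/", 1)[0].lower()
        if host in BLOCKED_DOMAINS:
            return True
    return False


def detect_sensitive_data(text):
    return bool(ID_NUMBER_PATTERN.search(text))


# One registry, so callers never pick or chain individual modules themselves.
DETECTORS = {
    "prompt_injection": detect_prompt_injection,
    "malicious_url": detect_malicious_url,
    "sensitive_data": detect_sensitive_data,
}


def check(text):
    """Single entry point: run every detector and merge the results."""
    risks = [name for name, detector in DETECTORS.items() if detector(text)]
    return Verdict(allowed=not risks, risks=risks)
```

A caller would pass both user input and model output through the same `check` call and block or rewrite the message whenever `allowed` is false; adding a new risk type means registering one more detector rather than integrating another product.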

Of course, large models aren't abstract concepts—they run on hardware and code, supporting upper-layer applications. Alibaba Cloud Security has also upgraded infrastructure security and AI application protection.

At the infrastructure layer, Alibaba Cloud Security launched Cloud Security Center, centered around products like AI-BOM and AI-SPM.

Specifically, the two core capabilities—AI-BOM (AI Bill of Materials) and AI-SPM (AI Security Posture Management)—solve the questions "What AI components did I install?" and "How many holes do these components have?" respectively.

AI-BOM’s core function is to comprehensively catalog AI components in deployment environments, creating an "AI Software Bill of Materials" that covers over 30 mainstream components such as Ray, Ollama, MLflow, Jupyter, and TorchServe, and automatically identifying existing security weaknesses and dependency vulnerabilities. Asset discovery no longer relies on manual checks but on cloud-native scanning.

AI-SPM acts more like a "radar": continuously assessing system security posture across dimensions like vulnerabilities, exposed ports, credential leaks, plaintext configurations, and unauthorized access, dynamically providing risk levels and remediation suggestions. It transforms security from "snapshot-style compliance" to "streaming-style governance."

In short: AI-BOM tells you what you are running; AI-SPM tells you where you are still vulnerable and need reinforcement.
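The division of labor between the two can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the product's data model: the `Component` fields, the `build_bom` and `assess_posture` helpers, the placeholder versions, and the risk thresholds are all invented for the example.

```python
from dataclasses import dataclass


@dataclass
class Component:
    name: str
    version: str
    public_port_exposed: bool
    auth_enabled: bool
    plaintext_secrets: bool
    known_vulns: int


def build_bom(components):
    """Inventory step (the AI-BOM question): what is installed, and which version?"""
    return sorted((c.name, c.version) for c in components)


def assess_posture(component):
    """Posture step (the AI-SPM question): collect findings and a rough risk level."""
    findings = []
    if component.public_port_exposed:
        findings.append("exposed port")
    if not component.auth_enabled:
        findings.append("unauthorized access possible")
    if component.plaintext_secrets:
        findings.append("plaintext configuration")
    if component.known_vulns > 0:
        findings.append(f"known vulnerabilities: {component.known_vulns}")
    # Arbitrary thresholds, chosen only for this example.
    if len(findings) >= 3:
        level = "high"
    elif findings:
        level = "medium"
    else:
        level = "low"
    return level, findings


# Hypothetical inventory; versions are placeholders, not real release numbers.
inventory = [
    Component("ollama", "0.0.0", True, False, False, 1),
    Component("mlflow", "0.0.0", False, True, False, 0),
]
```

Running `assess_posture` on a schedule rather than once is what would turn this from a snapshot into the continuous, "streaming-style" assessment described above.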

For AI application protection, Alibaba Cloud Security’s core product is WAAP (Web Application & API Protection).

No matter how intelligent the model's output, if the entry points are flooded with scripted requests, forged tokens, and API abuse, the application won’t last more than a few seconds. Alibaba WAAP exists precisely for this purpose. It doesn’t treat AI applications as traditional web systems but provides specialized vulnerability rules for AI components, AI business fingerprint databases, and traffic profiling systems.

For instance: WAAP covers 50+ component vulnerabilities including arbitrary file upload in MLflow and remote command execution in Ray services; its built-in AI crawler fingerprint database can identify tens of thousands of new language corpus scrapers and model evaluation tools per hour; its API asset discovery feature automatically detects which internal systems expose GPT interfaces, giving security teams a map of exposure points.

Most importantly, WAAP and AI Guardrail don’t conflict; they complement each other: one sees "who came," the other sees "what was said." One acts like an "identity verifier," the other like a "behavior monitor." Through identification, isolation, tracking, and countermeasures, this gives AI applications a form of "self-immunity," not only blocking bad actors but also preventing the model itself from going rogue.

03 AI for Security

Since AI adoption is like rolling dice—some use it for fortune-telling, others to write love poems, some for gray-market gains—it’s no surprise that others would use it for security.

In the past, security operations required teams to stay on patrol day and night amid flashing red and green alerts, cleaning up yesterday’s messes by day and keeping the systems company through the night shift.

Now, all this can be handed over to AI. In 2024, Alibaba Cloud Security fully integrated the Tongyi large model, launching an AI capability cluster covering data security, content security, business security, and security operations, along with a new slogan: Protect at AI Speed.

The meaning is clear: business moves fast, risks move faster—but security must move fastest of all.

Using AI for security boils down to two things: enhancing security operation efficiency and upgrading security products with intelligence.

The biggest pain point of traditional security systems is "lagging policy updates": attackers evolve, but rules don’t; alerts arrive, but no one understands them.

The key change brought by large models is shifting security systems from rule-driven to model-driven, creating a closed loop via "AI understanding + user feedback": AI understands user behavior → users provide feedback on alerts → models continuously train → detection improves → cycles shorten → risks become harder to hide. This is the so-called "data flywheel."
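The loop above can be made concrete with a deliberately simplified sketch. Instead of retraining a model, which is what the article describes, this toy version only nudges an alert threshold whenever an analyst marks an alert as a false positive or reports a missed threat. The class name `FeedbackTunedDetector`, the score scale of 0 to 1, and the step size are assumptions for illustration.

```python
class FeedbackTunedDetector:
    """Toy detector whose alert threshold moves with analyst feedback."""

    def __init__(self, threshold=0.5, step=0.05):
        self.threshold = threshold  # scores at or above this raise an alert
        self.step = step            # how far one piece of feedback moves the bar

    def is_alert(self, score):
        return score >= self.threshold

    def feedback(self, score, was_real_threat):
        """Fold one analyst judgment back into the detector."""
        alerted = self.is_alert(score)
        if alerted and not was_real_threat:
            # False positive: raise the bar so similar events stop alerting.
            self.threshold = min(1.0, self.threshold + self.step)
        elif not alerted and was_real_threat:
            # Missed threat: lower the bar so similar events alert next time.
            self.threshold = max(0.0, self.threshold - self.step)
        # Correct decisions leave the threshold unchanged.
```

Each call to `feedback` plays the role of one turn of the flywheel: the more judgments users contribute, the closer the detector's behavior tracks what they actually consider a threat.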

The flywheel offers two advantages:

First, improved security operations efficiency for cloud tenants: Threat detection used to mean an inefficient routine of "massive alerts + manual screening." Now, intelligent modeling enables precise identification of malicious traffic, host intrusions, backdoor scripts, and other anomalies, greatly improving alert accuracy. Meanwhile, automated response is tightly coordinated throughout incident handling: host cleanliness remains stable at 99%, and network traffic purity approaches 99.9%. Additionally, AI deeply participates in tasks like alert attribution, event classification, and process recommendations. Currently, coverage of alert event types reaches 99%, large models cover more than 88% of users, and security team productivity has risen to an unprecedented level.

Second, accelerated improvement in cloud security product capabilities. At the data and business security layers, AI assumes the role of "gatekeeper": leveraging large model capabilities, it automatically identifies over 800 entity data types and performs intelligent anonymization and encryption. Beyond structured data, the system integrates over 30 document and image recognition models, enabling real-time identification, classification, and encryption of sensitive information such as ID numbers and contract elements within images. Overall data tagging efficiency increases fivefold, recognition accuracy reaches 95%, significantly reducing privacy data leak risks.

For example, in content security scenarios, traditional methods rely on human reviewers, manual labeling, and large-scale annotation training. Now, through prompt engineering and semantic enhancement, Alibaba has achieved 100% improvement in labeling efficiency, 73% increase in recognizing ambiguous expressions, 88% boost in image content recognition, and 99% accuracy in detecting AI-generated face spoofing attacks.
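To make the data-security "gatekeeper" step described above more tangible, here is a minimal anonymization sketch. It handles a single entity type with a regular expression, whereas the article describes model-based recognition of hundreds of types. The 18-character format (17 digits plus a final digit or X) matches mainland China ID numbers; the function name `mask_ids` and the choice to keep the first six and last four characters are assumptions for illustration.

```python
import re

# 6 characters kept, 8 masked, 4 kept (the last may be a digit or X).
ID_PATTERN = re.compile(r"\b(\d{6})(\d{8})(\d{3}[\dXx])\b")


def mask_ids(text):
    """Replace the middle eight digits of each ID-like string with asterisks."""
    return ID_PATTERN.sub(lambda m: m.group(1) + "*" * 8 + m.group(3), text)
```

For example, `mask_ids("ID: 11010519491231002X.")` returns `"ID: 110105********002X."`, while text without an 18-character ID-like string passes through unchanged.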

If the flywheel emphasizes autonomous defense combining AI and human experience, then the intelligent assistant is the security officer’s all-round helper.

The most frequent questions facing security personnel daily are: What does this alert mean? Why was it triggered? Is it a false positive? How should I handle it? In the past, answering these required checking logs, reviewing history, consulting seniors, submitting tickets, contacting support... Now, just one sentence suffices.

But the intelligent assistant isn’t just a Q&A bot—it’s more like a vertical Copilot for security, with five core capabilities:

• Product Q&A Assistant: Automatically answers questions like how to configure a feature, why a policy triggered, or which resources lack protection, replacing numerous ticket services;

• Alert Explanation Expert: Input an alert ID to automatically generate event explanations, attack chain forensics, recommended response strategies, with multilingual support;

• Security Incident Review Assistant: Automatically reconstructs the full chain of an intrusion incident, generating timelines, attack path maps, and responsibility assessment suggestions;

• Report Generator: One-click generation of monthly/quarterly/emergency security reports, covering event statistics, response feedback, and operational performance, with visual export support;

• Full Language Support: Currently supports Chinese and English, with international version launching in June, automatically adapting to overseas team usage habits.

Don’t underestimate these "five small tasks." Official Alibaba data shows: over 40,000 users served, 99.81% user satisfaction, 100% alert type coverage, and a 1175% year-on-year increase in prompt support capability (FY24). In short, it bundles the night-shift star performer, the report-writing intern, the alert-handling engineer, and the business-savvy security consultant into a single API, empowering humans to focus on decisions, not patrols.

04 Epilogue

Looking back, history is never short of "epoch-defining technologies," but few survive beyond the second year of hype.

The internet, P2P, blockchain, autonomous driving—each wave was hailed as "new infrastructure" during its peak, yet only a select few survived the "governance vacuum" to become true foundational systems.

Today’s generative AI stands at a similar juncture: on one side, models flourish, capital rushes in, applications break barriers; on the other, prompt injections, content overreach, data leaks, model manipulation, dense vulnerabilities, blurred boundaries, and accountability gaps prevail.

Yet AI differs from previous technologies. It doesn’t just draw pictures, write poems, code, or translate; it mimics human language, judgment, and even emotions. Precisely because of this, AI’s fragility stems not just from code flaws, but from reflections of human nature. If humans harbor bias, it learns that too; if humans seek convenience, it helps cut corners.

Technology’s own convenience acts as an amplifier of these reflections: Past IT systems required "user authorization"; attacks relied on infiltration. Today’s large models need only a prompt injection—just chatting with you can cause system failures and privacy breaches.

Of course, there’s no such thing as a "flawless" AI system—that’s science fiction, not engineering.

The only answer is using secure models to protect insecure ones; using intelligent systems to counter intelligent threats—rolling the AI dice, Alibaba chooses security to land face-up.
